Julie Muzina

on 13 August 2024

Visual Testing: GitHub Actions Migration & Test Optimisation

What is Visual Testing?

Visual testing analyses the visual appearance of a user interface. Snapshots of pages are taken to create a “baseline”, or the current expectation of how each page should appear. Proposed changes are then compared against the baseline. Any snapshots that deviate from the baseline are flagged for review.
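The comparison step can be sketched as a deliberately simplified pixel diff in JavaScript. This is not how Percy compares snapshots. It assumes both snapshots are same-sized RGBA pixel buffers, marks pixels whose channels differ by more than a tolerance, and flags the snapshot for review when the share of changed pixels exceeds a threshold. The function names and the `tolerance`/`threshold` options are hypothetical.

```javascript
// Simplified visual diff for illustration only; Percy's real comparison is more sophisticated.
// Assumption: both snapshots are same-sized RGBA buffers (4 bytes per pixel).

// Returns the indices of pixels whose RGBA channels differ by more than `tolerance`.
function diffPixels(baseline, candidate, tolerance = 0) {
  if (baseline.length !== candidate.length || baseline.length % 4 !== 0) {
    throw new Error("Snapshots must be RGBA buffers of the same size");
  }
  const changed = [];
  for (let i = 0; i < baseline.length; i += 4) {
    let maxDelta = 0;
    for (let c = 0; c < 4; c++) {
      maxDelta = Math.max(maxDelta, Math.abs(baseline[i + c] - candidate[i + c]));
    }
    if (maxDelta > tolerance) changed.push(i / 4);
  }
  return changed;
}

// Flags a snapshot for review when the share of changed pixels exceeds `threshold`.
function needsReview(baseline, candidate, { tolerance = 0, threshold = 0 } = {}) {
  const pixelCount = baseline.length / 4;
  const changedCount = diffPixels(baseline, candidate, tolerance).length;
  return changedCount / pixelCount > threshold;
}

// Two 2-pixel "snapshots": the second pixel turns from white to red.
const baselineShot = new Uint8ClampedArray([255, 255, 255, 255, 255, 255, 255, 255]);
const candidateShot = new Uint8ClampedArray([255, 255, 255, 255, 255, 0, 0, 255]);
console.log(diffPixels(baselineShot, candidateShot)); // [ 1 ]
console.log(needsReview(baselineShot, candidateShot)); // true
```

Lowering `threshold` makes the check stricter. With the default of 0, any changed pixel flags the snapshot.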

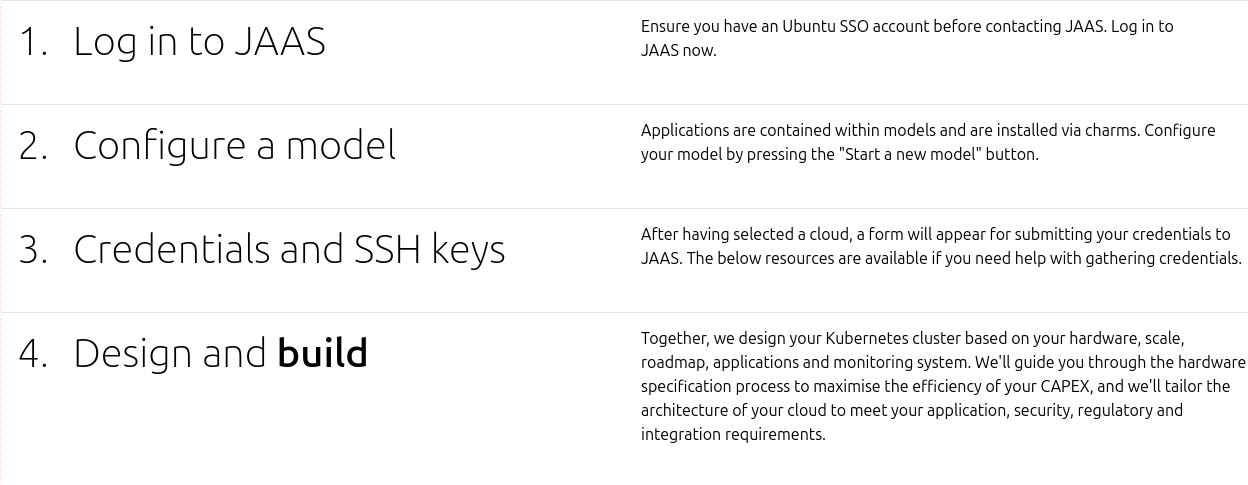

For example, consider these two images:

To the untrained eye, these snapshots look very similar. You might be able to tell that they are different, but not know specifically how they are different, or what code changes may have occurred to cause that change.

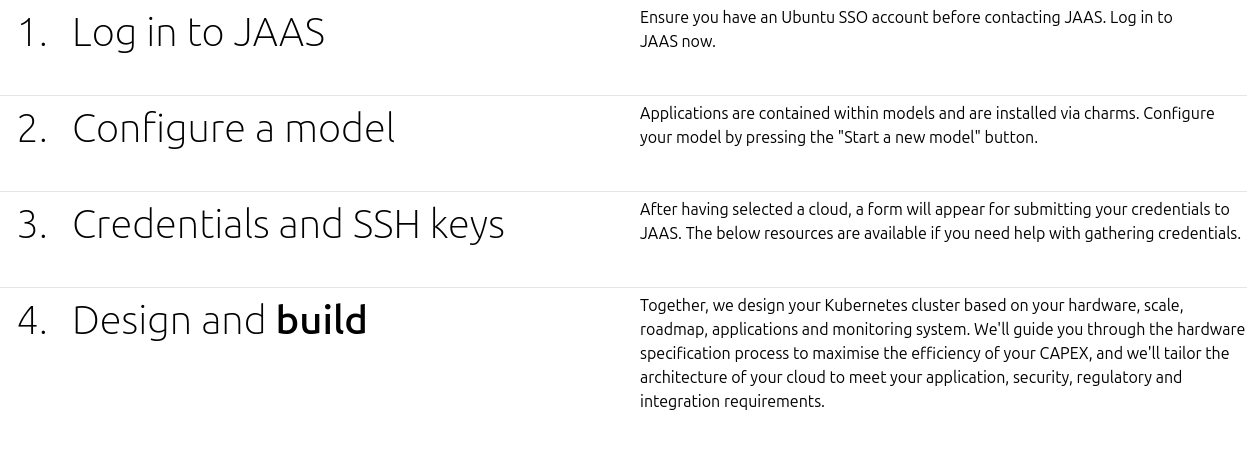

Now let’s look at how Percy (a visual testing service by Browserstack) sees this comparison:

The differences between the snapshots are highlighted in bright red. The further down the page we look, the further apart the text and horizontal rules in the two images drift vertically. This suggests an issue with content spacing, potentially in element height, margin, or padding. Visual testing helps identify and prevent visual regressions like this one, and lets maintainers introduce visual changes in a controlled manner.
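The “growing gap” pattern is what you would expect from a small spacing change that accumulates down the page. The toy model below uses made-up heights and margins rather than Vanilla’s real CSS. It stacks elements with a uniform bottom margin and shows that a 2px margin change shifts the first element by 0px but the fifth by 8px. The function names are hypothetical.

```javascript
// Toy model (an assumption, not Vanilla's CSS): elements are stacked vertically,
// each followed by the same bottom margin.

// Top offset (px) of each element in the stack.
function topOffsets(heights, marginBottom) {
  const offsets = [];
  let y = 0;
  for (const h of heights) {
    offsets.push(y);
    y += h + marginBottom;
  }
  return offsets;
}

// How far each element moves when the margin changes from baseline to candidate.
function verticalDrift(heights, baselineMargin, candidateMargin) {
  const before = topOffsets(heights, baselineMargin);
  const after = topOffsets(heights, candidateMargin);
  return after.map((y, i) => y - before[i]);
}

// Five 24px-tall lines of text; the margin grows from 16px to 18px.
console.log(verticalDrift([24, 24, 24, 24, 24], 16, 18)); // [ 0, 2, 4, 6, 8 ]
```

The drift grows linearly with an element’s position in the stack, which is why a diff like Percy’s shows small differences at the top of the page and large ones further down.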

Our Use Case

Canonical’s Web team maintains Vanilla Framework, a component library used across Canonical’s entire web presence. Changes made to Vanilla affect all of our downstream projects. As with any component library, visual testing is essential: it helps us catch visual regressions before they reach our users, ensuring that our applications look and feel exactly as we intend.

Vanilla’s website includes hundreds of usage examples, which demonstrate our look and feel and help developers build sites with confidence by using them as templates. We use Percy to test each of these examples on every pull request, which lets us monitor visual changes to our design system.

The Problem

Initially, Vanilla used CircleCI for visual testing. We chose CircleCI because it can run workflows for pull requests from forks with access to secret tokens, which GitHub Actions does not allow for workflows triggered by `pull_request` events. CircleCI checked out the source code from each pull request, merged main into it to make sure the latest changes were tested, built and ran Vanilla, and performed visual testing, all in one workflow.

In our old workflow, every pull request (regardless of which files it changed or how ready it was for review) was tested on every commit. Pull requests are regularly updated several times during the review process, and each pull request synchronisation event triggered another test run. We recursively captured every Vanilla component example markup file in our project, so the screenshots quickly added up: each test captured around 1,400 screenshots, and each PR consumed thousands, which often put us in danger of exceeding our screenshot budget.

Goals for Improvement

We aimed to achieve the following workflow improvements:

- Migrate from CircleCI to GitHub Actions for consistency with our other CI workflows

- Decrease the total number of tests we run

- Decrease the total number of screenshots taken per test

Our Solution

Migrating to GitHub Actions

Following an approach recommended by the GitHub security team, we split the work into two workflows to take Percy snapshots while minimising the security risks of running workflows against forked pull requests.

Prepare Workflow

A “prepare” workflow runs on every pull request push. It uploads styling, example templates, and pull request metadata as a GitHub Actions artefact, which the next workflow picks up. Because only the templates and styling from the pull request are passed on, we limit how much pull request code the snapshot workflow uses.

```yaml
name: "Prepare Percy build"

on:
  workflow_call:
    inputs:
      pr_number:
        required: true
        type: number
        description: "Pull request number of the pull request that initiated this build"
      commitsh:
        required: true
        type: string
        description: "Signature of the current HEAD commit for this pull request"

jobs:
  copy_artifact:
    name: Copy changed files to GHA artifact
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repo
        uses: actions/checkout@v4
        with:
          # Only check out the files needed for testing visual changes to examples from the pull request
          sparse-checkout: |
            templates/docs/examples/
            scss/

      - name: Populate artifact directory
        run: |
          mkdir -p artifact
          # Ensure the directories that will be copied exist
          mkdir -p templates/docs/examples
          mkdir -p scss
          # Copy the testing files into the artifact
          cp -R templates/docs/examples/ scss/ artifact/

      # Archive the PR number associated with this workflow since it won't be available in the workflow_run context
      # https://github.com/orgs/community/discussions/25220
      - name: Archive PR data
        if: github.event_name=='pull_request'
        working-directory: artifact
        run: |
          echo ${{ inputs.pr_number }} > pr_num.txt
          echo ${{ inputs.commitsh }} > pr_head_sha.txt

      # Upload the artifact so that it can be used by the snapshots workflow
      - name: Upload artifact
        uses: actions/upload-artifact@v4
        with:
          name: "percy-testing-web-artifact"
          path: artifact/*

# Completion of this workflow will trigger the snapshots workflow via workflow_run
```

Snapshot Workflow

A “snapshot” workflow runs when the prepare workflow completes, using GitHub’s `workflow_run` trigger. It checks out the current version of main, downloads the styling, templates, and PR metadata from the prepare workflow’s artefact, and replaces main’s styling and templates with those from the pull request. It then builds, runs, and tests Vanilla using Percy.
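The prepare workflow writes the pull request number and head commit SHA to pr_num.txt and pr_head_sha.txt. The snapshot workflow reads them back to build the Percy branch name (`pull/{number}`), pull request number, and commit inputs. The sketch below models that hand-off as a hypothetical JavaScript helper, `percyInputsFromArtifact`. The format checks are our own added assumption, not part of the workflows in this post. Because the artefact is produced by a pull request run, it seems prudent to reject anything that isn’t a plain PR number or a 40-character hex SHA before using it.

```javascript
// Hypothetical helper mirroring the "Get PR data" step of the snapshot workflow.
// The format checks are an added precaution (an assumption, not part of the original workflows):
// the artefact comes from a pull request run, so its contents are treated as untrusted.
function percyInputsFromArtifact(prNumText, headShaText) {
  // `echo` writes a trailing newline, so trim before validating.
  const prNumber = prNumText.trim();
  const sha = headShaText.trim();
  if (!/^[1-9][0-9]*$/.test(prNumber)) {
    throw new Error(`Unexpected PR number: ${JSON.stringify(prNumber)}`);
  }
  if (!/^[0-9a-f]{40}$/.test(sha)) {
    throw new Error(`Unexpected commit SHA: ${JSON.stringify(sha)}`);
  }
  // Same shape as the inputs passed to the percy-snapshot composite action.
  return {
    branch_name: `pull/${prNumber}`,
    pr_number: prNumber,
    commitsh: sha,
  };
}

console.log(percyInputsFromArtifact("42\n", "0123456789abcdef0123456789abcdef01234567\n").branch_name); // pull/42
```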

Percy’s baseline needs to be updated on pushes to main. To run Percy the same way on pull requests and on merges to main, we created a composite action that builds, runs, and visually tests Vanilla. We chose a composite action so that other workflows can call the build-and-test logic as a single step without duplicating code.

```yaml
name: Percy Snapshot - composite/reusable action
description: Starts Vanilla local server, captures example snapshots, & uploads them to Percy

inputs:
  pr_number:
    required: false
    description: Identifier of a pull request to associate with this Percy build. i.e. github.com/{repo}/pull/{pr_number}
  commitsh:
    required: true
    description: SHA signature of commit triggering the build
  branch_name:
    required: true
    description: Name of the branch that Percy is being run against
  percy_token_write:
    required: true
    description: Percy token with write access

runs:
  using: composite
  steps:
    - name: Install Python
      uses: actions/setup-python@v5
      with:
        python-version: "3.10.14"

    - name: Install Node
      uses: actions/setup-node@v4
      with:
        node-version: 20

    - name: Install dependencies
      shell: bash
      run: yarn install && pip3 install -r requirements.txt

    - name: Build Vanilla
      shell: bash
      run: yarn build

    - name: Start testing server
      shell: bash
      run: ./entrypoint 0.0.0.0:8101 &

    - name: Wait for server availability
      shell: bash
      run: |
        sleep_interval_secs=2
        max_wait_time_secs=30
        wait_time_secs=0
        while [ "$wait_time_secs" -lt "$max_wait_time_secs" ]; do
          if curl -s localhost:8101/_status/check -I; then
            echo "Server is up!"
            break
          else
            wait_time_secs=$((wait_time_secs + sleep_interval_secs))
            if [ "$wait_time_secs" -ge "$max_wait_time_secs" ]; then
              echo "[TIMEOUT ERROR]: Local testing server failed to respond within $max_wait_time_secs seconds!"
              exit 1
            else
              echo "Waiting for server to start..."
              sleep "$sleep_interval_secs"
            fi
          fi
        done

    - name: Take snapshots & upload to Percy
      shell: bash
      env:
        PERCY_TOKEN: ${{ inputs.percy_token_write }}
        PERCY_BRANCH: ${{ inputs.branch_name }}
        PERCY_COMMIT: ${{ inputs.commitsh }}
        PERCY_PULL_REQUEST: ${{ inputs.pr_number }}
        PERCY_PAGE_LOAD_TIMEOUT: 120000
      # snapshots.js is a JS module that returns an array of Percy snapshot objects
      # See https://www.browserstack.com/docs/percy/take-percy-snapshots/snapshots-via-cli#advanced-options for expected output structure
      run: npx percy snapshot snapshots.js
```

We then created a workflow that tests proposed changes from pull requests, using the artefact from the prepare workflow.

```yaml
# This workflow listens for completion of the prepare workflow, picks up its output, and performs Percy testing.
name: "Percy screenshots"

on:
  workflow_run:
    workflows:
      - "Percy (pushed)"
      - "Percy (labeled)"
    types:
      - completed

jobs:
  upload:
    name: Build project with proposed changes & take Percy snapshots
    if: github.event.workflow_run.conclusion=='success'
    runs-on: ubuntu-latest
    steps:
      - name: Checkout main
        uses: actions/checkout@v4

      - name: Remove directories that will be replaced by artifact files
        run: rm -rf templates/docs/examples/ scss/

      - name: Download artifact from pull request prepare workflow
        uses: actions/download-artifact@v4
        with:
          name: "percy-testing-web-artifact"
          github-token: ${{ secrets.GITHUB_TOKEN }}
          repository: ${{ github.event.workflow_run.repository.full_name }}
          run-id: ${{ github.event.workflow_run.id }}

      - name: Move artifact files to replace deleted source files
        run: |
          # Ensure the examples directory exists, so that no error occurs if the PR didn't have an examples directory
          mkdir -p examples
          # The artifact contains `scss/`, `examples/`, `pr_num.txt`, and `pr_head_sha.txt`.
          # `examples/` must be moved to `templates/docs`.
          mv examples/ templates/docs/.

      # Extracts the PR metadata from the artifact
      - name: Get PR data
        if: github.event.workflow_run.event=='pull_request'
        id: get_pr_data
        run: |
          echo "sha=$(cat pr_head_sha.txt)" >> "$GITHUB_OUTPUT"
          echo "pr_number=$(cat pr_num.txt)" >> "$GITHUB_OUTPUT"

      - name: Take snapshots & upload to Percy
        uses: "./.github/actions/percy-snapshot"
        with:
          branch_name: "pull/${{ steps.get_pr_data.outputs.pr_number }}"
          pr_number: ${{ steps.get_pr_data.outputs.pr_number }}
          commitsh: ${{ steps.get_pr_data.outputs.sha }}
          percy_token_write: ${{ secrets.PERCY_TOKEN_WRITE }}
```
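Both this workflow and the baseline workflow delegate the actual capture to `npx percy snapshot snapshots.js` inside the composite action. There, snapshots.js is a JS module that returns an array of Percy snapshot objects. Vanilla’s real file isn’t shown here, so the following is only a minimal sketch of that shape. It assumes the testing server on localhost:8101 from the composite action, uses hypothetical example paths and widths (`EXAMPLE_PATHS`, `WIDTHS`, `buildSnapshots` are illustrative names), and sets only the `name`, `url`, and `widths` snapshot options. The Percy documentation linked in the action covers the full set.

```javascript
// snapshots.js: a minimal sketch, not Vanilla's actual file.
// Assumptions: the composite action's testing server listens on localhost:8101,
// and the example paths and widths below are hypothetical placeholders.
const BASE_URL = "http://localhost:8101";

// Hypothetical example pages to capture.
const EXAMPLE_PATHS = [
  "/docs/examples/patterns/buttons/combined",
  "/docs/examples/patterns/card/combined",
];

// One mobile and one desktop width per page (assumed values).
const WIDTHS = [375, 1280];

// Maps each example path to a Percy snapshot object.
function buildSnapshots(paths, baseUrl = BASE_URL, widths = WIDTHS) {
  return paths.map((examplePath) => ({
    name: examplePath,
    url: new URL(examplePath, baseUrl).toString(),
    widths,
  }));
}

// `npx percy snapshot snapshots.js` loads this module and reads the exported array.
module.exports = buildSnapshots(EXAMPLE_PATHS);
```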

Baseline Workflow

Visual testing relies on comparing proposed changes against an approved baseline. We treat the main branch as the approved version of our source, so each push to main triggers a Percy test whose results become the new baseline until the next push to main.

We created a workflow that updates the baseline on pushes to main.

```yaml
name: Update Percy Baseline

on:
  push:
    branches:
      - main

jobs:
  snapshot:
    name: Take Percy snapshots
    runs-on: ubuntu-latest
    steps:
      - name: Checkout main
        uses: actions/checkout@v4

      # Run Percy test against the new version of main, and set the results to baseline
      - uses: ./.github/actions/percy-snapshot
        with:
          branch_name: main
          commitsh: ${{ github.sha }}
          percy_token_write: ${{ secrets.PERCY_TOKEN_WRITE }}
```

Reducing Percy Usage

Enhancing Test Selectivity

To decrease the total number of tests we run, we started running our visual tests more selectively. We identified the following minimal set of scenarios that call for visual testing on pull requests:

- Pushes to non-draft pull requests against main that change our styling and/or template files

- Pushes to any pull request against main that has the “Review: Percy needed” label. The label is used to request Percy testing manually, even when a PR does not change styling or template files.

- Adding the “Review: Percy needed” label to a pull request against main

We use the `paths` filter to skip pull requests that don’t change styling or template files. However, we ran into an interesting problem when combining path filtering with label filtering. The `paths` filter applies to the whole workflow trigger and is combined with the other `pull_request` filters as a logical AND, so a single workflow could not handle all of our prepare cases: pull requests with the “Review: Percy needed” label must be tested regardless of which files they change, yet a labelled pull request that touches no styling or template files would never pass the `paths` filter. So we created two workflows, a prepare-label workflow and a prepare-push workflow. Both call a reusable workflow (the prepare workflow above) that performs the test preparation, with different filters controlling when each one triggers.
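The routing between the two prepare workflows can be modelled as a pair of predicates. This is a simplified illustration, not GitHub’s implementation. It approximates the `paths` globs with directory-prefix checks, and the event fields (`action`, `draft`, `labels`, `addedLabel`, `changedFiles`, `baseBranch`) are a hypothetical flattening of the real `pull_request` payload. The model shows that each qualifying event produces exactly one prepare run, and that a labelled PR which doesn’t touch styling or templates is still tested.

```javascript
// Simplified model of the two "prepare" triggers, for illustration only.
// `paths` globs are approximated with prefix checks; event fields are hypothetical.
const PERCY_LABEL = "Review: Percy needed";
const VISUAL_PATH_PREFIXES = ["templates/docs/examples/", "scss/"];

const touchesVisualPaths = (changedFiles) =>
  changedFiles.some((file) => VISUAL_PATH_PREFIXES.some((prefix) => file.startsWith(prefix)));

// "Percy (pushed)": the trigger filters (branch, types, paths) AND the job's `if:` must all pass.
function pushedWorkflowRuns(pr) {
  return (
    pr.baseBranch === "main" &&
    ["opened", "synchronize"].includes(pr.action) &&
    touchesVisualPaths(pr.changedFiles) &&
    !pr.draft &&
    !pr.labels.includes(PERCY_LABEL)
  );
}

// "Percy (labeled)": no `paths` filter, so the label alone decides.
function labeledWorkflowRuns(pr) {
  if (pr.baseBranch !== "main") return false;
  if (pr.action === "synchronize") return pr.labels.includes(PERCY_LABEL);
  if (pr.action === "labeled") return pr.addedLabel === PERCY_LABEL;
  return false;
}

// Number of prepare runs (and therefore Percy builds) one pull request event causes.
const prepareRuns = (pr) => Number(pushedWorkflowRuns(pr)) + Number(labeledWorkflowRuns(pr));

// A labelled PR that only touches the README is still tested, exactly once:
console.log(prepareRuns({ baseBranch: "main", action: "synchronize", draft: false,
  labels: [PERCY_LABEL], changedFiles: ["README.md"] })); // 1
// A labelled PR that also changes SCSS is tested once, not twice:
console.log(prepareRuns({ baseBranch: "main", action: "synchronize", draft: false,
  labels: [PERCY_LABEL], changedFiles: ["scss/_base.scss"] })); // 1
```

The `!pr.labels.includes(PERCY_LABEL)` check in the pushed workflow is what prevents double runs. Without it, a labelled PR that changes SCSS would satisfy both predicates.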

The label workflow handles pull requests that have specifically been marked for Percy testing.

```yaml
# This workflow ensures Percy is executed against PRs with the Percy required label, regardless of which paths were changed
name: "Percy (labeled)"

on:
  pull_request:
    branches:
      - main
    types:
      # workflow runs after a label is added to the PR, or when a commit is added to a PR with the Percy label
      - labeled
      - synchronize

jobs:
  copy_artifact:
    name: Copy changed files to GHA artifact
    # Run if commits were pushed to a PR with the Percy label, or if the Percy label was added to a PR
    if: "(github.event.action == 'synchronize' && contains(toJson(github.event.pull_request.labels.*.name), 'Review: Percy needed')) || (github.event.action == 'labeled' && github.event.label.name == 'Review: Percy needed')"
    uses: ./.github/workflows/percy-prepare.yml
    with:
      pr_number: ${{ github.event.number }}
      commitsh: ${{ github.event.pull_request.head.sha }}

# Completion should trigger the snapshots workflow.
```

The push workflow handles pull requests that change any files relevant to visual testing. It also ignores draft pull requests.

```yaml
# This workflow runs Percy against non-draft PRs that have changes in relevant filepaths.
name: "Percy (pushed)"

on:
  pull_request:
    branches:
      - main
    # Only runs on PRs that change directories relevant to visual testing
    paths:
      - "templates/docs/examples/**"
      - "scss/**"
    types:
      - opened
      - synchronize

jobs:
  copy_artifact:
    name: Copy changed files to GHA artifact
    # Ignore draft PRs and PRs with the Percy label
    # If we ran tests against PRs with the Percy label, we would run tests twice (the test is also run by the labelling workflow)
    if: "!github.event.pull_request.draft && !contains(toJson(github.event.pull_request.labels.*.name), 'Review: Percy needed')"
    uses: ./.github/workflows/percy-prepare.yml
    with:
      pr_number: ${{ github.event.number }}
      commitsh: ${{ github.event.pull_request.head.sha }}

# Completion should trigger the snapshots workflow.
```

Reducing Snapshot Volume

Capturing every example as a separate view (even when a component may be as small as 200x100px) led to a very large number of screenshots per test. To reduce that number, we created a new “combined” example for each component, which renders every variant example from that component’s directory in a single view.

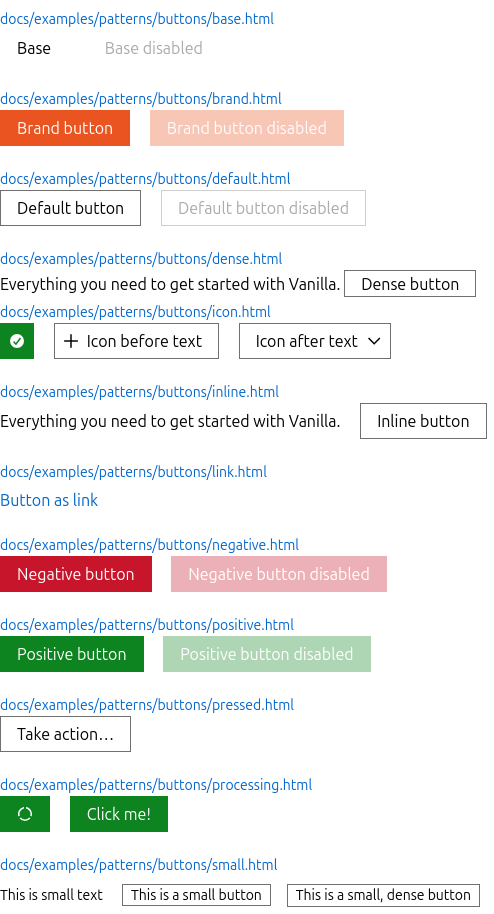

Below is a screenshot of the base button example from before the snapshot reduction effort. This page was captured twice (once on mobile, and once on desktop). The rest of the button variant examples were also captured twice each. This led to dozens of screenshots, just to cover the button examples.

All button variant examples were combined into a single view. This combined example is captured twice, covering all of the button variants and saving dozens of screenshots for buttons alone.

By combining all of these examples into a single test case, we can test significantly more examples per screenshot, reducing our total screenshot usage.

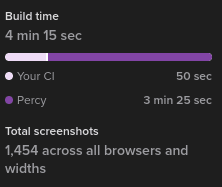
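The saving follows directly from counting pages. With every page captured at two widths (mobile and desktop), a component with N variant examples costs 2N screenshots when each variant is its own page, but only 2 once they share a combined view. The sketch below uses hypothetical file paths and illustrative function names. It groups example files by their component directory and compares the two totals. It counts one screenshot per page per width and ignores any other multipliers (such as rendering in several browsers), which would scale both totals equally.

```javascript
// Hypothetical illustration of the "combined example" saving; paths are placeholders.

// Groups example files by the directory (component) that contains them.
function groupByComponent(exampleFiles) {
  const groups = new Map();
  for (const file of exampleFiles) {
    const slash = file.lastIndexOf("/");
    const componentDir = slash === -1 ? "." : file.slice(0, slash);
    if (!groups.has(componentDir)) groups.set(componentDir, []);
    groups.get(componentDir).push(file);
  }
  return groups;
}

// Screenshots per test = pages captured x widths per page (mobile + desktop = 2).
function screenshotCounts(exampleFiles, widthsPerPage = 2) {
  return {
    separate: exampleFiles.length * widthsPerPage, // one page per variant
    combined: groupByComponent(exampleFiles).size * widthsPerPage, // one page per component
  };
}

const exampleFiles = [
  "examples/patterns/buttons/default.html",
  "examples/patterns/buttons/positive.html",
  "examples/patterns/buttons/negative.html",
  "examples/patterns/buttons/disabled.html",
  "examples/patterns/cards/default.html",
  "examples/patterns/cards/highlighted.html",
];
console.log(screenshotCounts(exampleFiles)); // { separate: 12, combined: 4 }
```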

Outcome

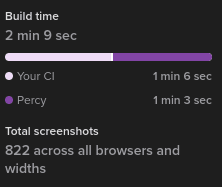

We dramatically reduced our total Percy usage and sped up our visual tests. We now perform visual testing towards the end of a pull request’s review process, instead of on every push to the PR. By combining our examples into larger views, we cut screenshot usage per test from ~1,400 to ~800 (roughly 43% fewer) and halved Percy’s build time from ~4 minutes to ~2 minutes.

We could have cut screenshot usage even further, but the sharp drop in screenshots per test let us increase our test coverage by capturing every example in each of our colour themes, which would have required an unsustainable number of screenshots per test before this effort. By running Percy more selectively and combining our example test cases into larger views, we reduced our test usage while increasing our test coverage.

Future Plans

- Reduce the screenshot usage of our baseline tests by updating the baseline only when files that affect Vanilla’s visual appearance change. This would require careful consideration of which files should trigger a test, as we need the baseline to keep matching the visual appearance of our main branch.

- Migrate our React Components library’s visual testing integration from CircleCI to GitHub Actions.