robgibbon

on 18 August 2021

If you’ve followed the steps in Part 1 and Part 2 of this series, you’ll have a working MicroK8s on the next-gen Ubuntu Core OS deployed, up, and running on the cloud with nested virtualisation using LXD. If so, you can exit any SSH session to your Ubuntu Core in the sky and return to your local system. Let’s take a look at getting Apache Spark on this thing so we can do all the data scientist stuff.

Call to hacktion: adapting the Spark Dockerfile

First, let’s download the Apache Spark release binaries and adapt the Dockerfile so that it plays nicely with PySpark:

wget https://archive.apache.org/dist/spark/spark-3.1.2/spark-3.1.2-bin-hadoop3.2.tgz

tar xzf spark-3.1.2-bin-hadoop3.2.tgz

cd spark-3.1.2-bin-hadoop3.2

cat > Dockerfile <<'EOF'

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

FROM ubuntu:20.04

ARG spark_uid=185

# Before building the docker image, first build and make a Spark distribution following

# the instructions in http://spark.apache.org/docs/latest/building-spark.html.

# If this docker file is being used in the context of building your images from a Spark

# distribution, the docker build command should be invoked from the top level directory

# of the Spark distribution. E.g.:

# docker build -t spark:latest -f kubernetes/dockerfiles/spark/Dockerfile .

ENV DEBIAN_FRONTEND noninteractive

RUN set -ex && \

sed -i 's/http:\/\/deb.\(.*\)/https:\/\/deb.\1/g' /etc/apt/sources.list && \

apt-get update && \

ln -s /lib /lib64 && \

apt install -y python3 python3-pip openjdk-11-jre-headless bash tini libc6 libpam-modules krb5-user libnss3 procps && \

mkdir -p /opt/spark && \

mkdir -p /opt/spark/examples && \

mkdir -p /opt/spark/work-dir && \

touch /opt/spark/RELEASE && \

rm /bin/sh && \

ln -sv /bin/bash /bin/sh && \

echo "auth required pam_wheel.so use_uid" >> /etc/pam.d/su && \

chgrp root /etc/passwd && chmod ug+rw /etc/passwd && \

rm -rf /var/cache/apt/*

COPY jars /opt/spark/jars

COPY bin /opt/spark/bin

COPY sbin /opt/spark/sbin

COPY kubernetes/dockerfiles/spark/entrypoint.sh /opt/

COPY kubernetes/dockerfiles/spark/decom.sh /opt/

COPY examples /opt/spark/examples

COPY kubernetes/tests /opt/spark/tests

COPY data /opt/spark/data

RUN pip install pyspark==3.1.2

RUN pip install findspark

ENV SPARK_HOME /opt/spark

WORKDIR /opt/spark/work-dir

RUN chmod g+w /opt/spark/work-dir

RUN chmod a+x /opt/decom.sh

ENTRYPOINT [ "/opt/entrypoint.sh" ]

# Specify the User that the actual main process will run as

USER ${spark_uid}

EOF

Containers: the hottest thing to hit 2009

So Apache Spark runs in OCI containers on Kubernetes. Yes, that’s a thing now. Let’s build that container image so that we can launch it on our Ubuntu Core hosted MicroK8s in the sky:

sudo apt install docker.io

sudo docker build . --no-cache -t localhost:32000/spark-on-uk8s-on-core-20:1.0

We will use an SSH tunnel to push the image to our remote private registry on MicroK8s. Yep, it’s time to open another terminal and run the following commands so we can set up a tunnel to help us to do that:

GCE_IIP=$(gcloud compute instances list | grep ubuntu-core-20 | awk '{ print $5}')

UK8S_IP=$(ssh <your Ubuntu ONE username>@$GCE_IIP sudo lxc list microk8s | grep microk8s | awk -F'|' '{ print $4 }' | awk -F' ' '{ print $1 }')

ssh -L 32000:$UK8S_IP:32000 <your Ubuntu ONE username>@$GCE_IIP

Push it real good: publishing our Spark container image

Make sure you leave that terminal open so that the tunnel stays up, and switch back to the one you were using before. The next step is to push the Apache Spark on Kubernetes container image we just built to the private image registry we installed on MicroK8s, all running on our Ubuntu Core instance on Google Cloud:

sudo docker push localhost:32000/spark-on-uk8s-on-core-20:1.0

And we’ll set up Jupyter so that we can launch and interact with Spark. Hop back into a terminal that has an SSH session open to the Ubuntu Core instance on GCE, and run the following command to launch a Jupyter notebook server on k8s. (Now would be a good time to stretch your legs because it’ll take a few minutes to complete.)

sudo lxc exec microk8s -- sudo microk8s.kubectl run --port 6060 \

--port 37371 --port 8888 --image=ubuntu:20.04 jupyter -- \

bash -c "apt update && DEBIAN_FRONTEND=noninteractive apt install python3-pip wget openjdk-11-jre-headless -y && pip3 install jupyter && pip3 install pyspark==3.1.2 && pip3 install findspark && wget https://archive.apache.org/dist/spark/spark-3.1.2/spark-3.1.2-bin-hadoop3.2.tgz && tar xzf spark-3.1.2-bin-hadoop3.2.tgz && jupyter notebook --allow-root --ip '0.0.0.0' --port 8888 --NotebookApp.token='' --NotebookApp.password=''"

Get certified: ensuring TLS trust

We need the CA certificate of our MicroK8s’ Kubernetes API to be available to Jupyter so that it will trust the encrypted connection with the API. So we’ll need to copy the CA cert over to our Jupyter container. Again, in the terminal with the SSH session open to the Ubuntu Core instance on GCE, run the following command:

sudo lxc exec microk8s -- sudo microk8s.kubectl cp /var/snap/microk8s/current/certs/ca.crt jupyter:.

Next, we’ll expose Jupyter as a Kubernetes service. We will then be able to set up another tunnel and reach it from our workstation’s browser, and our Spark executor instances will be able to call back to the Spark driver and block manager. Use the following commands:

sudo lxc exec microk8s -- sudo microk8s.kubectl expose pod jupyter --type=NodePort --name=jupyter-ext --port=8888

sudo lxc exec microk8s -- sudo microk8s.kubectl expose pod jupyter --type=ClusterIP --name=jupyter --port=37371,6060

Oh yes oh yes, I feel that it’s time for another SSH tunnel through to the LXC container so that we’ll be able to securely reach the Jupyter notebook server from our workstation’s browser over an encrypted link – remember to leave the terminal open so that the tunnel stays up.

J_PORT=$(ssh <your Ubuntu ONE username>@$GCE_IIP sudo lxc exec microk8s -- sudo microk8s.kubectl get all | grep jupyter-ext | awk '{ print $5 }' | awk -F':' '{ print $2 }' | awk -F'/' '{ print $1 }')

ssh -L 8888:$UK8S_IP:$J_PORT <your Ubuntu ONE username>@$GCE_IIP

Landing on Jupyter: testing our Spark cluster from a Jupyter notebook

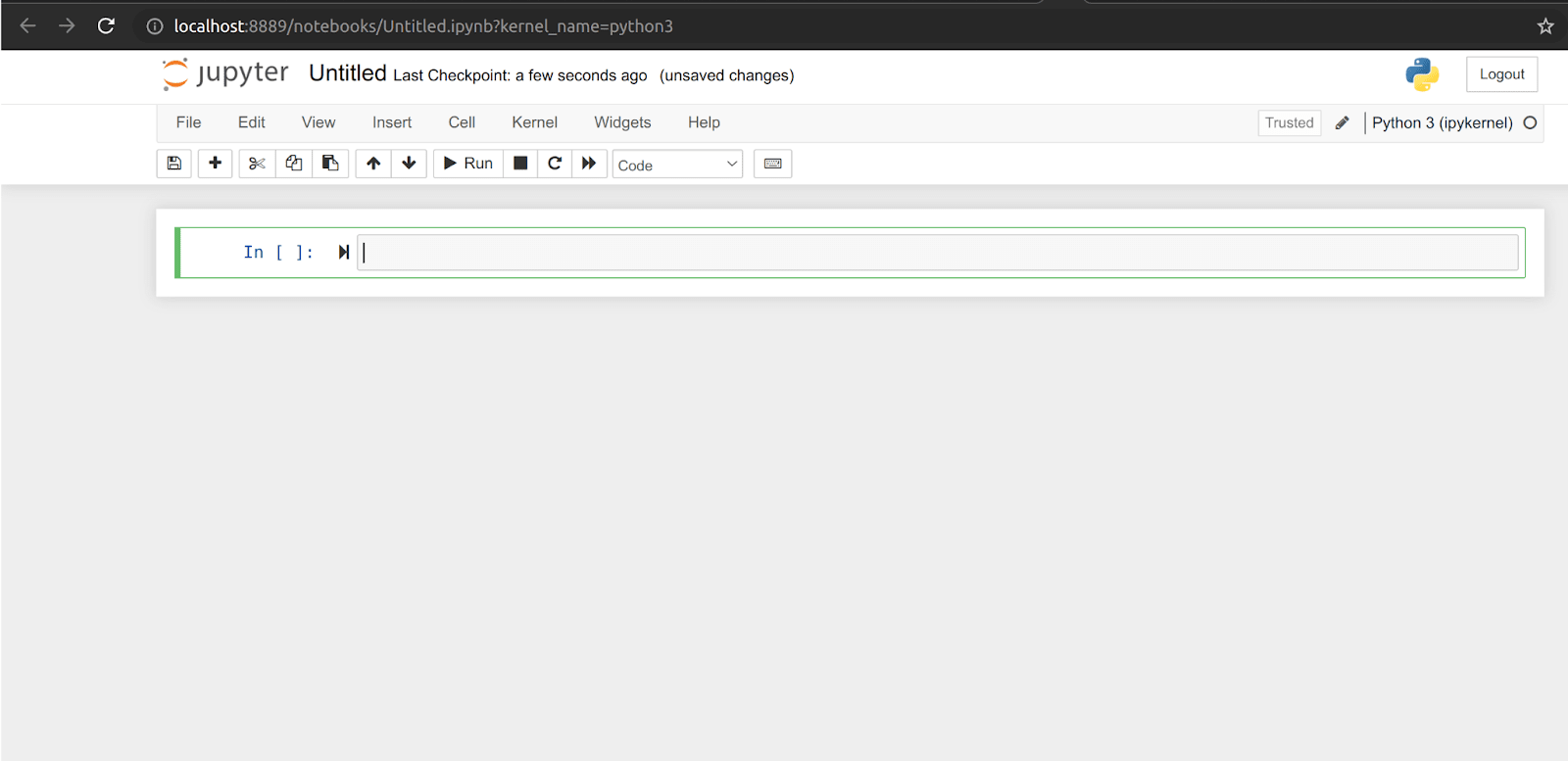

Now you should be able to browse to Jupyter using your workstation’s browser, nice and straightforward. Just point it at the local end of the tunnel we opened on port 8888: http://localhost:8888/
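If the page doesn’t load, it can save time to confirm that the local end of the SSH tunnel is listening before you go digging around in Kubernetes. Here is a minimal sketch, assuming the tunnel forwards local port 8888 as in the `ssh -L 8888:...` command; the helper name `port_is_open` is made up for illustration.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and completes the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable, or timed out: treat all as "not reachable"
        return False


if __name__ == "__main__":
    # Assumption: 8888 is the local port passed to `ssh -L` for the Jupyter tunnel
    if port_is_open("localhost", 8888):
        print("Something is listening on localhost:8888")
    else:
        print("Nothing is listening on localhost:8888; check the SSH tunnel terminal")
```

A successful connection only shows that `ssh` is listening locally. If the browser still can’t load the page, the remote side of the tunnel (the NodePort on the MicroK8s container) is the next thing to look at.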

In the newly opened Jupyter tab of your browser, create and launch a new Python 3 notebook, and add the following Python script. If all goes well, you’ll be able to launch a Spark cluster, connect to it, and execute a parallel calculation when you run the cell.

import os

os.environ["SPARK_HOME"] = "/spark-3.1.2-bin-hadoop3.2"

import pyspark

import findspark

from pyspark import SparkContext, SparkConf

findspark.init()

conf = SparkConf().setAppName('spark-on-uk8s-on-core-20').setMaster('k8s://https://kubernetes.default.svc')

conf.set("spark.kubernetes.container.image", "localhost:32000/spark-on-uk8s-on-core-20:1.0")

conf.set("spark.kubernetes.allocation.batch.size", "50")

conf.set("spark.io.encryption.enabled", "true")

conf.set("spark.authenticate", "true")

conf.set("spark.network.crypto.enabled", "true")

conf.set("spark.executor.instances", "5")

conf.set('spark.kubernetes.authenticate.driver.caCertFile', '/ca.crt')

conf.set("spark.driver.host", "jupyter")

conf.set("spark.driver.port", "37371")

conf.set("spark.blockManager.port", "6060")

sc = SparkContext(conf=conf)

print(sc)

big_list = range(100000000000000)

rdd = sc.parallelize(big_list, 5)

odds = rdd.filter(lambda x: x % 2 != 0)

odds.take(20)

Tour de teacup: summary
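As a recap of what the notebook cell computes, here is a cluster-free sketch of the same calculation in plain Python. It uses only the standard library, and `first_odds` is a hypothetical helper, not part of PySpark. Like Spark’s `take`, it stops as soon as it has enough results instead of walking the whole range.

```python
from itertools import islice


def first_odds(limit: int, count: int) -> list:
    """Lazily filter range(limit) for odd numbers and return the first `count`."""
    # range() is lazy in Python 3, so no list of `limit` items is ever built
    odds = (x for x in range(limit) if x % 2 != 0)
    # islice stops pulling from the generator after `count` items
    return list(islice(odds, count))


if __name__ == "__main__":
    print(first_odds(100000000000000, 20))
```

The notebook’s `odds.take(20)` should come back with the same twenty values, the odd numbers from 1 to 39, since `take` starts reading from the first partition of the range.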

OK, we didn’t do anything very advanced with our Spark cluster in the end. But in Part 4, we’ll take this still further. The next step is going to be all about banding together a bunch of these Ubuntu Core VM instances using LXD clustering and a virtual overlay fan network. With this approach, we will build our own highly available, fully distributed, MicroK8s-powered Kubernetes cluster. Turtles all the way down! It’d be cool to have that up on the cloud as a “hardcore” (pardon my punning), zero-trust-security-hardened alternative way to run all the things.

See you again in Part 4!